The Team

The AI group at the institute brings together experts with backgrounds in both industry and academia. We place equal emphasis on theoretical foundations, thorough experimentation, and practical applications. Our close collaboration ensures a continuous exchange of knowledge between scientific research and applied projects.

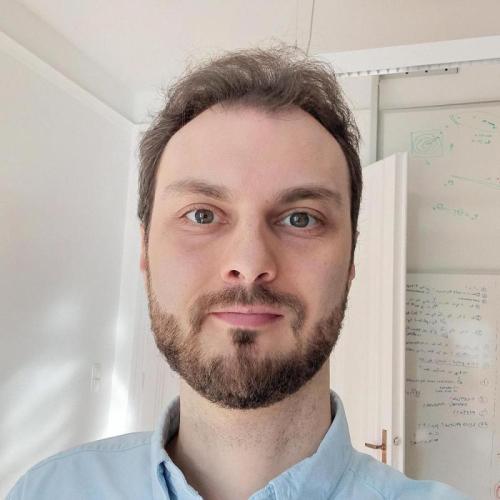

Balázs Szegedy

Mathematical Theory

Attila Börcs, PhD

NLP, Modeling, MLOps

Adrián Csiszárik

Representation Learning, Foundations

Domonkos Czifra

NLP, Foundations

Péter Kőrösi-Szabó

Modeling

Gábor Kovács

NLP, Modeling

Judit Laki, MD PhD

Healthcare

Márton Muntag

Time Series, NLP, Modeling

Dávid Terjék

Generalization, Mathematical Theory

Dániel Varga

Foundations, Computer-Aided Proofs

Pál Zsámboki

Reinforcement Learning, Geometric Deep Learning

Zsolt Zombori

Formal Reasoning

Péter Ágoston

Combinatorics, Geometry

Beatrix Mária Benkő

Representation Learning

Diego González Sánchez

Generalization, Mathematical Theory

Melinda F. Kiss

Representation Learning

Ákos Matszangosz

Topology, Foundations